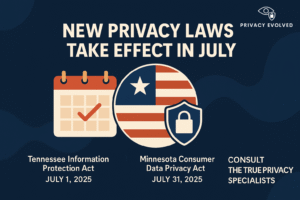

In late July 2025, several major steps were taken in Europe and the United States to regulate privacy, data protection, and artificial intelligence (AI). These updates reflect a growing global focus on balancing technological innovation with the protection of individual rights. We’ll explore three key developments: a new European Commission template for AI training data disclosure, a proposed U.S. law to ban “surveillance pricing”, and an executive order by President Trump to prevent “woke AI” bias in federal agencies. Each of these moves highlights different approaches to ensuring AI and data practices are fair, transparent, and respectful of privacy.

European Commission’s Template for AI Training Data Disclosure

Context and Purpose

On July 24, 2025, the European Commission published a template that General-Purpose AI (GPAI) model providers must use to publicly summarize the data used to train their models. The summary is required under the EU’s AI Act, and the template was released just before the AI Act’s rules for GPAI models took effect on August 2, 2025, to help providers comply with those rules. By requiring AI developers to publicly disclose the sources and nature of their training data, the Commission aims to let content creators and other rights holders check whether their work was used, making it easier to enforce copyright and other legal protections. The Commission presents the template as a step toward trustworthy, transparent AI that builds public trust and helps unlock AI’s potential for society.

Structure of the Template

The Commission’s template is organized into three main sections that GPAI providers must fill out for each model’s training data summary:

- General Information: Details about the AI provider and the model, such as the provider’s identity and the date the model was placed on the EU market. This section also asks for the total size of the training dataset and the data modalities involved (e.g. text, images, video, audio), along with a breakdown of data types within each modality (for example, within text data: fictional texts, scientific publications, news articles, etc.).

- List of Data Sources: A comprehensive listing of where the training data came from. This includes publicly available datasets (with unique identifiers and links), private third-party datasets used under license, and web-scraped data. For web-scraped content, providers must list the top domains by the amount of content scraped, specifically the top 10% of domains for each modality, with a reduced requirement for small and medium-sized enterprises (the top 5% of domains or 1,000 domains, whichever is lower). This section also covers data the provider itself supplied to the model (for instance, data from users’ prompts and interactions, or the provider’s own internal data collections).

- Other Data Processing Details: Information on measures taken to respect rights and laws during data handling. Providers should describe how they honored any opt-out requests under the EU’s text and data mining exceptions (which allow rightsholders to forbid data mining of their content). They also must explain any steps taken after data collection to remove or filter out data that is protected (for example, data subject to copyright reservations or other legal restrictions).
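The web-scraping disclosure is essentially a ranking exercise. The minimal Python sketch below assumes the provider has a crawl log of `(url, size_in_bytes)` records, treats each URL’s hostname as its “domain” (a real pipeline might normalize to registrable domains instead), ranks domains by total bytes scraped, and rounds the percentage cut-off up to a whole domain. For the reduced small-company requirement, it assumes the lower of 5% of domains or 1,000 domains. The function names, input format, and rounding rule are illustrative assumptions, not part of the Commission’s template.

```python
from collections import Counter
from urllib.parse import urlsplit


def bytes_per_domain(crawl_records):
    """Sum scraped bytes per hostname from (url, size_in_bytes) records."""
    totals = Counter()
    for url, size in crawl_records:
        # Assumption: the (lowercased) hostname stands in for the "domain".
        host = urlsplit(url).hostname or ""
        totals[host] += size
    return totals


def _ceil_percent(n, pct):
    """Integer ceiling of pct% of n, avoiding floating-point rounding errors."""
    return (n * pct + 99) // 100


def top_domains(totals, sme=False):
    """Return the largest domains by bytes scraped.

    Default: top 10% of domains. With sme=True: the lower of 5% of domains
    or 1,000 domains (an assumed reading of the reduced requirement).
    Ties are broken alphabetically so the output is deterministic.
    """
    n = len(totals)
    k = min(_ceil_percent(n, 5), 1000) if sme else _ceil_percent(n, 10)
    ranked = sorted(totals.items(), key=lambda item: (-item[1], item[0]))
    return [domain for domain, _ in ranked[:k]]


if __name__ == "__main__":
    # Hypothetical crawl log: 30 domains of increasing size.
    records = [(f"https://d{i}.example/page", i * 1_000) for i in range(30)]
    totals = bytes_per_domain(records)
    print(top_domains(totals))            # ['d29.example', 'd28.example', 'd27.example']
    print(top_domains(totals, sme=True))  # ['d29.example', 'd28.example']
```

Rounding up means even a provider with very few scraped domains lists at least one; whether the Commission expects rounding up or down is an open assumption here.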

This standardized format is intended to give a comprehensive overview of an AI model’s training sources, listing the main data collections and explaining how the data was gathered and processed. It even accounts for disclosure of synthetic data generation and other technical details, creating a uniform reporting format across the industry.

Implications

By mandating these public summaries, the EU aims to strengthen accountability and transparency in AI development. Researchers, downstream AI users, and content creators will gain a clearer view of what data these powerful models were trained on. That could help them assess potential biases or gaps in the training data, and it gives copyright holders a way to detect unauthorized use of their work.

However, the new rules also raise industry concerns. Some AI companies worry that revealing too much detail about their training data could expose trade secrets or strategic information about how their models are built. There are also ongoing debates about how this transparency might influence copyright litigation; for example, content owners outside the EU might attempt legal action if they find their work in an AI model’s training set. The Commission has acknowledged the need to balance innovation with rights protection, and it plans to periodically review and refine the template requirements. In essence, Europe’s approach treats transparency as a cornerstone of trustworthy AI, even at some cost to business secrecy, setting a notable precedent for AI governance.

U.S. Bill to Ban “Surveillance Pricing”

Context and Purpose

In the United States, lawmakers are tackling a different data-driven issue: the use of personal data to unfairly set prices and wages. On July 23, 2025, Congressman Greg Casar (D-Texas) and Congresswoman Rashida Tlaib (D-Michigan) introduced the “Stop AI Price Gouging and Wage Fixing Act of 2025.” This bill targets what Casar calls “surveillance-based price setting,” which is when companies use people’s personal data – such as their browsing history, location, demographics, race, or gender – to charge different prices for the same product or service. Similarly, it addresses wage discrimination through data, prohibiting employers from using personal data to set or reduce someone’s pay (outside of merit or performance factors). The goal is to protect consumers and workers from hidden, algorithm-driven discrimination that they may not even be aware is happening.

Casar’s proposal follows rising concern about these practices. Earlier in 2025, the Federal Trade Commission (FTC) released initial findings from a study indicating that companies can use personal information, such as location, demographics, and browsing history, to tailor prices to individual consumers. Reported examples have raised eyebrows: one airline was reportedly considering using AI to adjust ticket prices if it learned a customer had been searching for a family member’s obituary online, reading that as a sign the person urgently needs to travel. Likewise, ride-share apps have been suspected of paying drivers less if data indicated the driver had visited a pawn shop, a possible signal of financial distress. These scenarios, while chilling, illustrate exactly the kind of exploitative behavior the bill aims to ban. As Rep. Casar put it, “Whether you know it or not, you may already be getting ripped off by corporations using your personal data to charge you more,” and without intervention “this problem is only going to get worse.”

Key Provisions of the Bill

The Stop AI Price Gouging and Wage Fixing Act proposes several concrete measures to curb these practices:

- Ban on Surveillance Pricing: It would prohibit the use of automated systems to set individualized prices based on personal data. In other words, companies could not charge different consumers different prices for the same product/service by exploiting private data about those individuals. Factors explicitly mentioned include a person’s online browsing history, search queries, purchase history, location data, demographics (like race or gender), or even something as subtle as how their mouse moves on a webpage. All such data-driven price discrimination would become unlawful.

- Ban on Surveillance Wage-Setting: The bill similarly prohibits using automated systems to determine wages based on personal data that isn’t related to job performance. An employer or platform couldn’t pay someone less (or decide their raise) because of, say, their web browsing habits, where they live, or other personal indicators. Setting pay must remain tied to the work itself, not surveillance of a worker’s private life or characteristics.

- Enforcement Mechanisms: To give the law teeth, it empowers the Federal Trade Commission (FTC) and state attorneys general to enforce the pricing rules, and the Equal Employment Opportunity Commission (EEOC) to oversee the wage-setting rules (since wage discrimination falls in their domain). Importantly, the bill also allows for a private right of action, meaning individual consumers or employees could sue companies for violations. Furthermore, it prevents companies from using forced arbitration clauses to block such lawsuits – ensuring aggrieved individuals have their day in court if needed.

- Exceptions for Discounts: The proposed law makes clear that not all price differentiation is banned. It carves out exceptions to allow benign or favorable practices like loyalty program discounts or discounts for certain groups. For example, offering a reduced price to veterans or senior citizens would still be allowed. The key distinction is that these are transparent, group-based discounts or rewards programs, not secretive price hikes based on spying on an individual’s behavior or identity.
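To make the line between banned and permitted practices concrete, here is a minimal, hypothetical Python sketch. It is not drawn from the bill’s text: the signal names in `SURVEILLANCE_SIGNALS` paraphrase the examples listed above, and the groups and percentages in `GROUP_DISCOUNT_PCT` are invented for illustration. The idea is that everyone starts from the same list price, a pricing model’s inputs can be audited against a list of personal-data signals, and the only adjustments are published, group-based discounts.

```python
# Hypothetical audit list: personal-data signals paraphrased from the article's
# description of the bill. These are NOT the bill's statutory definitions.
SURVEILLANCE_SIGNALS = frozenset({
    "browsing_history", "search_queries", "purchase_history",
    "location", "race", "gender", "mouse_movements",
})

# Hypothetical published discounts, in whole percent off the list price.
GROUP_DISCOUNT_PCT = {"veteran": 10, "senior": 10, "loyalty_member": 5}


def flag_surveillance_inputs(feature_names):
    """Return the pricing-model inputs that appear on the hypothetical signal list."""
    return sorted(set(feature_names) & SURVEILLANCE_SIGNALS)


def quote_price_cents(list_price_cents, groups):
    """Start from one list price for everyone, then apply the single best
    published group discount the customer qualifies for (integer cents)."""
    pct = max(
        (GROUP_DISCOUNT_PCT[g] for g in groups if g in GROUP_DISCOUNT_PCT),
        default=0,
    )
    return list_price_cents * (100 - pct) // 100


if __name__ == "__main__":
    print(flag_surveillance_inputs(["sku", "location", "mouse_movements"]))
    # ['location', 'mouse_movements']
    print(quote_price_cents(10_000, ["senior"]))  # 9000
```

Working in integer cents avoids floating-point rounding; allowing only one discount at a time and rounding down are arbitrary choices for the sketch.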

Support and Outlook

The push for this legislation reflects growing interest in digital fairness. Consumer advocacy organizations such as the Consumer Federation of America and Public Citizen have endorsed the bill as a necessary step to protect people from a new form of high-tech discrimination. Public Citizen, for instance, praised the bill for “drawing a clear line in the sand”: companies can offer normal discounts and fair wages, but “not by spying on people.” These groups argue that surveillance-based pricing and wage-setting are exploitative practices that deepen inequality and strip consumers and workers of their dignity, and that this law would give enforcers the tools to stop those abuses.

However, despite support from consumer groups, the bill’s fate in Congress is uncertain. It enters a broader debate on tech regulation in which some lawmakers worry that over-regulating algorithms could stifle innovation or interfere with free-market pricing strategies. There may also be pushback from industry lobbyists who argue that personalized pricing can sometimes benefit consumers (for example, by offering targeted discounts) or that banning it outright could have unintended consequences. Additionally, Congress has been divided on many tech issues, so passing new legislation may be challenging.

Still, the fact that a federal ban on “surveillance pricing” has been introduced – and with an added focus on algorithmic wage-fixing – is significant. It shows U.S. policymakers responding to public concern about AI-driven inequality. Even if the bill faces an uphill battle, it has started a national conversation about how companies use our data in ways that might disadvantage us, and it could spur regulatory agencies or states to take action in the meantime. For consumers and workers, the message is empowering: you “shouldn’t be charged more or paid less because of your data,” as one supporter put it, and the law should protect you from that kind of hidden bias.

Trump’s Executive Order to Prevent “Woke AI” in Government

Context and Objective

On July 23, 2025, President Donald Trump signed an executive order titled “Preventing Woke AI in the Federal Government.” It was one of three AI-related executive orders signed that day as part of a broader plan to bolster U.S. leadership in AI. While the other two orders dealt with building AI infrastructure and promoting American AI exports, this order focused on the ideological neutrality and accuracy of AI systems used by federal agencies.

The order’s premise is that AI models, especially large language models (LLMs) used by government, should be free from political or ideological bias. The Trump administration voiced concern that certain popular AI systems had been intentionally or unintentionally skewed by what it calls “woke” ideologies – explicitly calling out concepts associated with diversity, equity, and inclusion (DEI). According to the order’s language, DEI in AI could lead to “suppression or distortion of factual information” and a prioritization of social agendas over truth. The order even cited examples (presumably referring to known incidents with AI models) where an image-generating AI changed the race or gender of historical figures to meet diversity criteria, or a chatbot refused a user’s request in a way that seemed to enforce a particular social viewpoint. These were offered as evidence that “ideological biases or social agendas” can degrade the quality and reliability of AI outputs.

In essence, the order aims to ensure that any AI systems the U.S. government procures or uses internally remain truthful, factual, and politically neutral. It is explicit about its scope: the government does not intend, at least in this context, to regulate AI in the private sector, but it has an obligation to “not procure models that sacrifice truthfulness and accuracy to ideological agendas.” The order presents this as building on an earlier Trump-era policy, Executive Order 13960 (2020) on trustworthy AI in the federal government, with a new emphasis on eliminating perceived left-leaning or “woke” bias in AI behavior.

Unbiased AI Principles

To achieve these goals, the order establishes two core “Unbiased AI Principles” that federal agencies must require in their AI systems:

- Truth-Seeking: Government-used AI models, especially LLMs, must be truthful and fact-focused when responding to users. The order specifies that models should prioritize historical accuracy, scientific objectivity, and factual correctness. They should also acknowledge uncertainty when information is incomplete or conflicting, rather than confidently asserting false or dubious answers. In other words, agencies should only use AI that is designed to give reliable, evidence-based output instead of misleading or overly speculative responses.

- Ideological Neutrality: AI models should be neutral and nonpartisan, avoiding built-in bias that favors a particular ideology or political stance. The order explicitly says models must not manipulate responses in favor of “ideological dogmas such as DEI.” Developers supplying systems to the government should not intentionally encode partisan or ideological judgments into the AI’s outputs unless those judgments are prompted by the user or otherwise readily accessible to the end user. In practice, this means a government chatbot shouldn’t, on its own, give a politically slanted answer or promote particular social philosophies. It should behave as a straightforward tool, providing information or analysis without an agenda.

These principles essentially demand that AI systems used by federal agencies behave like neutral, factual assistants, rather than, say, a politically opinionated entity or one that enforces a particular social norm by default. The nickname “woke AI” is used pejoratively by the order to describe AI that the administration believes has been skewed by progressive social values; the solution offered is to mandate neutrality and truth as guiding principles in federal AI procurement.

Implementation Steps

The executive order outlines a process to put these principles into practice:

- The Office of Management and Budget (OMB) is tasked with issuing detailed implementation guidance within 120 days of the order. This guidance will instruct agencies on how to evaluate and enforce the Unbiased AI Principles in any AI contracts or tools they use. In developing this guidance, OMB must consider practical constraints like technical limitations (acknowledging that perfect truth or neutrality may be technically challenging in AI) and avoid being overly prescriptive in ways that could stifle innovation.

- The guidance will also require vendor transparency about how their models work. For instance, AI providers might need to disclose an AI system’s base prompts, design specifications, or results of any bias and performance evaluations. However, the order notes that OMB should avoid demanding highly sensitive details like proprietary model weights, so as not to force companies to give up trade secrets. The idea is to strike a balance where the government gets enough insight to judge a model’s neutrality without dictating exactly how companies build their AI.

- National security exceptions: The OMB guidance can allow exceptions for AI systems used in national security contexts. This acknowledges that intelligence or defense-related AI might have different requirements or might not be feasible to discuss openly, so those could be carved out from these rules if needed.

- Each federal agency, once OMB issues its guidance, will have to incorporate these principles into new AI procurements. Specifically, when an agency buys or signs a contract for an LLM (or potentially other AI tools), the contract must include terms that the AI adheres to the truth-seeking and ideological neutrality principles. Furthermore, agencies should add contract clauses stating that if an AI vendor’s model is later found non-compliant (after a chance to correct issues), the agency can terminate the contract and require the vendor to bear the costs of decommissioning that AI system. This creates a financial incentive for vendors to ensure their models remain in line with the principles or risk losing government business.

- Within 90 days of the OMB guidance, agencies must adopt procedures to ensure that the LLMs they procure comply with the Unbiased AI Principles. Where practicable, they are also expected to retrofit existing AI contracts with similar requirements, pushing systems already in use toward compliance.
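The timeline stacks two deadlines: OMB guidance within 120 days of the order, then agency procedures within 90 days of that guidance. The small Python sketch below uses the standard `datetime` module to show the arithmetic, assuming plain calendar-day counting from the July 23, 2025 signing date. The guidance date passed to the second function is a hypothetical input, since the real agency deadline depends on when OMB actually issues its guidance.

```python
from datetime import date, timedelta

ORDER_SIGNED = date(2025, 7, 23)     # signing date stated in the article
OMB_WINDOW = timedelta(days=120)     # OMB guidance deadline after signing
AGENCY_WINDOW = timedelta(days=90)   # agency procedures deadline after guidance


def omb_guidance_deadline(signed=ORDER_SIGNED):
    """Latest date for OMB guidance, assuming plain calendar-day counting."""
    return signed + OMB_WINDOW


def agency_procedures_deadline(guidance_issued):
    """Deadline for agency procedures, counted from the day guidance is issued."""
    return guidance_issued + AGENCY_WINDOW


if __name__ == "__main__":
    latest_guidance = omb_guidance_deadline()
    print(latest_guidance)                              # 2025-11-20
    print(agency_procedures_deadline(latest_guidance))  # 2026-02-18
```

If OMB issues guidance before its deadline, the agency deadline moves earlier too; the second function simply takes whatever issuance date applies.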

In short, the implementation plan uses the leverage of the federal purse: the government won’t buy or use AI that doesn’t meet these unbiased criteria, and vendors will have to certify and disclose enough to prove their models are compliant.

Implications and Controversies

Unsurprisingly, this executive order stirred debate. Supporters of the administration’s approach argue that it ensures government AI systems remain trustworthy and free from political manipulation, which is particularly important if agencies rely on AI for decisions or public services. They claim that what the order demands is essentially common sense: factual correctness and no political bias, which any taxpayer-funded tool should strive for. In fact, Elon Musk’s startup xAI praised the announcement, seeing alignment with its own mission of building truth-seeking AI. (Musk’s company has explicitly marketed its AI “Grok” as aiming for truth and eschewing political correctness, so the company welcomed Trump’s stance.) It’s also worth noting that the order doesn’t outright ban any content or force AIs to say certain things – it mostly requires transparency and neutrality, which some industry experts have described as a relatively “light touch” approach. From that perspective, it might not be very difficult for AI providers (many of whom already try to make their models objective) to comply, as long as they document their efforts.

However, critics have raised several concerns. Civil rights and tech ethics groups fear that labeling efforts to reduce bias in AI as “woke” could undermine years of progress made to ensure AI treats people fairly. These experts point out that AI systems trained on internet data often inherently reflect biases in society – including racial or gender biases – and that a lot of work has gone into mitigating those issues. An order that discourages focusing on DEI might disincentivize companies from continuing to address discrimination by AI, effectively telling them to ignore bias issues rather than fix them. Detractors also argue that the very term “woke AI” is misleading: “There’s no such thing as woke AI – there’s AI that discriminates, and AI that works for all people,” as one advocate put it. By that logic, striving for inclusion and fairness in AI isn’t an ideological agenda but a necessary part of making AI accurate and useful for everyone.

Critics have also raised free-expression concerns and the specter of censorship. Some have compared this U.S. move to China’s practice of dictating approved ideology in technology. They worry that requiring ideological neutrality is itself a form of government influence over speech: if an AI won’t answer a question about systemic racism straightforwardly because it is afraid to appear “woke,” is the government effectively suppressing discussion of certain topics? The administration’s defenders counter that the order doesn’t tell AI what not to say; it requires only that any intentional ideological slant be disclosed and that models not lie or push an agenda. Even so, the culture-war framing of the order, which specifically targets DEI concepts, has fueled political division. Rep. Don Beyer, a leader of the bipartisan Congressional AI Caucus, called the order “counterproductive to responsible AI development” and “potentially dangerous,” arguing that true neutrality comes from training on facts and science, and that explicitly targeting “woke inputs” itself bakes in a particular ideological stance.

From the industry’s standpoint, companies like Google or Microsoft – which sell AI services to the government – have been cautious. They largely support broad AI innovation plans but now face the challenge of proving their AI isn’t “woke.” This could mean additional compliance steps or documentation to show how their models make decisions. Some worry this could amount to pressure to err on the side of not addressing bias at all, effectively self-censoring features that were meant to make AI outputs more fair or respectful. On the other hand, the actual requirements might not change much in their development process; it may simply require a paper trail and some extra testing to demonstrate neutrality.

Overall, the order underscores how AI has become entangled in ideological battles. Its impact will depend on how OMB’s guidance comes out and how strictly agencies enforce these rules in practice. It could lead to more transparency from AI vendors and perhaps new “politically neutral” modes for AI models. Or it could have minimal effect if companies find it easy to nominally comply. The controversy, though, highlights a paradox: training AI to be truly neutral and factual is very difficult, given that human language and knowledge are often politically or culturally loaded. As some experts noted, trying to sanitize AI outputs of any perceived bias might even degrade their quality or usefulness. It’s a tricky line to walk, and the coming months will reveal how feasible it is to have an AI that both “doesn’t offend anyone’s ideology” and still speaks truthfully about complex issues.

Conclusion

These recent developments underscore a common theme: as AI technologies advance and permeate our lives, governments are racing to set guardrails that protect people’s rights and values. In Europe’s case, the emphasis is on transparency and accountability – pulling back the curtain on what data fuels AI, so that issues like copyright infringement or bias can be addressed head-on. In the United States, we see a dual focus: tackling the misuse of personal data (through the proposed surveillance pricing ban) to ensure fairness in the marketplace, and addressing the potential ideological slant of AI (through the executive order) to ensure fairness in governance and public discourse.

For businesses, technologists, and the public at large, these moves signal that the era of largely unregulated AI and data practices is coming to an end. Whether it’s an AI model’s training dataset or an algorithm setting prices, regulators want new levels of insight and fairness built into the process. Organizations will need to be more vigilant than ever in how they develop and deploy AI – from documenting their training pipelines to auditing how their AI might affect different groups of people.

Crucially, these updates also highlight the growing importance of privacy and AI compliance expertise. Navigating the patchwork of new rules, such as the EU’s AI Act requirements, proposed U.S. legislation, and new federal procurement standards, will be a challenge. Companies and government agencies will benefit from consulting privacy and data protection professionals to stay compliant with the latest regulations. Such experts can help interpret laws, implement best practices for data transparency, and design AI systems that respect both legal requirements and ethical norms.

In a world increasingly driven by algorithms, it’s clear that privacy and ethical AI are no longer just idealistic concepts, but practical necessities. From protecting consumer rights and preventing discrimination to maintaining public trust in government technology, the responsible handling of data and AI will shape the future of business and society. By staying informed and proactive – and seeking guidance when needed – organizations can innovate with confidence, knowing they are respecting individuals’ rights and staying on the right side of emerging regulations. In short, the partnership between technological innovation and privacy compliance will be key to unlocking AI’s benefits while safeguarding the values we care about.

If your organization is navigating the complex intersection of privacy, data protection, and AI regulation, now is the time to act with confidence and clarity. At Privacy Evolved, we offer specialized, technical consulting services dedicated exclusively to privacy compliance. Our team is ready to help you assess risks, meet global regulatory requirements, and build trustworthy data practices. Visit our website (www.privacyevolved.com) to learn more and book a strategic consultation tailored to your needs.